Leveraging Enterprise AI and RAG-LLM Technologies for Business Transformation

Abstract

Enterprise Artificial Intelligence (AI) helps organizations improve operational efficiency, innovate, and stay competitive. Drawing on the principles outlined in "Enterprise AI For Dummies" and "AI For Dummies," this white paper examines Retrieval-Augmented Generation (RAG) architectures built on Large Language Models (LLMs). It presents practical use cases for small and medium-sized enterprises (SMEs) and small and medium-sized businesses (SMBs) pursuing digital transformation, and it includes a RAG-LLM architecture diagram and a reference list for further reading.

Introduction to Enterprise AI

Enterprise AI involves deploying AI systems to automate, optimize, and enhance business processes. AI adoption is no longer limited to large corporations; SMEs and SMBs can now use AI to support growth and innovation. With the rise of LLMs such as OpenAI's GPT models, and other advances in AI, businesses can address challenges ranging from customer service optimization to predictive analytics.

What is Retrieval-Augmented Generation (RAG)?

RAG is an architecture that combines the generative capability of LLMs with an external retrieval step. This hybrid approach mitigates two well-known limitations of LLMs, outdated knowledge and hallucinations, by supplying the model with relevant, up-to-date data retrieved from external sources such as databases, APIs, or knowledge bases.

Key Components of RAG Architecture:

- Retriever Module: Identifies and retrieves relevant information from external data sources.

- Generative Model: Uses the retrieved information as context to generate accurate and contextual responses.

- Feedback Loop: Continuously learns from interactions to improve retrieval and generation accuracy.
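The retriever and generative model described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: the function names (`retrieve`, `build_prompt`, `generate`) are hypothetical, retrieval uses a simple word-overlap cosine similarity rather than a real vector index, and the LLM is passed in as a plain callable so that no particular vendor API is assumed.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=2):
    """Retriever module: return up to top_k documents that share words with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Place the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def generate(prompt, llm):
    """Generative model step: `llm` is any callable that maps a prompt to text (assumption)."""
    return llm(prompt)
```

In practice, `llm` would wrap a call to whichever hosted or local model the business uses, and `retrieve` would typically be replaced by a search over a document index.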

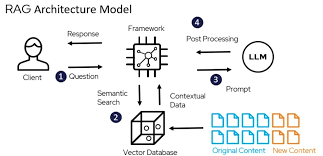

RAG-LLM Architecture Diagram

The following diagram illustrates the architecture of RAG-LLM systems:

Diagram Description:

- Input: User query or business data.

- Retriever: Connects to external data sources (e.g., databases, APIs).

- Generative Model: LLM processes the query and retrieved data to generate responses.

- Feedback Loop: Refines the system through user feedback and analytics.
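One possible way to realize the feedback loop is to record whether users found a retrieved document helpful and to blend that signal into later rankings. The sketch below is an assumption about how such a loop could work, not a description of a specific product; `FeedbackStore`, `rerank`, and the `weight` parameter are hypothetical names chosen for illustration.

```python
from collections import defaultdict


class FeedbackStore:
    """Keeps helpful / not-helpful vote counts per document (illustrative only)."""

    def __init__(self):
        self.up = defaultdict(int)
        self.down = defaultdict(int)

    def record(self, doc_id, helpful):
        """Store one user vote for a document."""
        if helpful:
            self.up[doc_id] += 1
        else:
            self.down[doc_id] += 1

    def helpfulness(self, doc_id):
        """Laplace-smoothed share of helpful votes; 0.5 when there are no votes yet."""
        up, down = self.up[doc_id], self.down[doc_id]
        return (up + 1) / (up + down + 2)


def rerank(scored_docs, feedback, weight=0.3):
    """Blend retrieval scores with user feedback.

    scored_docs is a list of (doc_id, retrieval_score) pairs with scores in [0, 1].
    """
    def blended(item):
        doc_id, score = item
        return (1 - weight) * score + weight * feedback.helpfulness(doc_id)

    return sorted(scored_docs, key=blended, reverse=True)
```

The smoothing keeps a single early vote from dominating the ranking, and `weight` controls how strongly feedback can override the retriever's own score.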

Use Cases for RAG-LLM in SMEs and SMBs

1. Customer Support Automation

- Problem: High costs and inefficiencies in traditional customer support systems.

- Solution: Deploy RAG-LLM-based chatbots capable of retrieving accurate and contextual information to resolve customer queries in real time.

- Impact: Reduced operational costs, improved customer satisfaction, and faster response times.

2. Intelligent Knowledge Management

- Problem: Employees spend significant time searching for relevant information in large knowledge bases.

- Solution: Use RAG-LLMs to provide instant access to pertinent documents, policies, and procedures.

- Impact: Increased productivity and streamlined workflows.

3. Personalized Marketing Campaigns

- Problem: Generic marketing strategies fail to resonate with diverse customer segments.

- Solution: Leverage RAG-LLMs to generate personalized content based on customer data and preferences.

- Impact: Higher engagement rates and improved ROI on marketing efforts.
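As a rough illustration of the personalization step, the sketch below assembles an LLM prompt from a customer record and a list of retrieved product facts. The field names (`first_name`, `segment`, `recently_viewed`) and the function `build_marketing_prompt` are assumptions made for this example; a real customer data model will differ.

```python
from string import Template

# Prompt skeleton; the wording is an illustrative assumption, not a tested prompt.
PROMPT = Template(
    "Write a short marketing email for $name.\n"
    "Segment: $segment\n"
    "Recently viewed: $recent\n"
    "Tone: $tone\n"
    "Use only the product facts listed below.\n"
    "Product facts:\n$facts"
)


def build_marketing_prompt(customer, product_facts, tone="friendly"):
    """Fill the template with customer data and retrieved product facts."""
    facts = "\n".join(f"- {fact}" for fact in product_facts)
    return PROMPT.substitute(
        name=customer["first_name"],
        segment=customer["segment"],
        recent=", ".join(customer["recently_viewed"]) or "none",
        tone=tone,
        facts=facts,
    )
```

Restricting the model to the listed product facts is one way to keep generated copy consistent with what the retriever actually returned.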

4. Fraud Detection and Risk Management

- Problem: Growing complexity in identifying fraudulent activities and assessing risks.

- Solution: Implement RAG-LLMs to analyze transaction data, detect anomalies, and suggest preventive measures.

- Impact: Enhanced security and minimized financial losses.
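The text above does not specify how anomalies are detected. One common arrangement, assumed here purely for illustration, is to flag unusual transactions with a simple statistical check and then pass a plain-text summary of the flagged items to the RAG-LLM as context for explanation and recommended actions. The functions `flag_anomalies` and `describe_for_llm` and the z-score threshold are hypothetical choices.

```python
import statistics


def flag_anomalies(history, new_amounts, threshold=3.0):
    """Flag amounts whose z-score against the customer's history exceeds the threshold.

    history must contain at least two past amounts (required by statistics.stdev).
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    flagged = []
    for amount in new_amounts:
        z = (amount - mean) / stdev if stdev else 0.0
        if abs(z) > threshold:
            flagged.append((amount, round(z, 1)))
    return flagged


def describe_for_llm(flagged):
    """Turn flagged transactions into text that can be added to the LLM's context."""
    if not flagged:
        return "No unusual transactions were flagged."
    lines = [f"- amount {amount} (z-score {z})" for amount, z in flagged]
    return "Transactions flagged as unusual:\n" + "\n".join(lines)
```

A z-score test is deliberately simple; businesses with enough labeled data would likely use a dedicated fraud model for the detection step and keep the LLM for explanation.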

5. Product Development and Innovation

- Problem: Difficulty in identifying market trends and customer needs.

- Solution: Utilize RAG-LLMs to analyze market data and generate actionable insights for product development.

- Impact: Accelerated innovation cycles and better alignment with market demands.

Implementation Strategies

- Data Integration: Establish connections to diverse and reliable data sources for effective retrieval.

- Model Customization: Fine-tune the LLM to align with business-specific requirements.

- Monitoring and Feedback: Implement robust mechanisms to monitor performance and incorporate user feedback.

- Scalability: Design the architecture to scale with growing data and business needs.
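For the data integration step, documents are commonly split into smaller overlapping passages before they are indexed for retrieval. The sketch below shows one simple word-based way to do this; the function name `chunk_text` and the default sizes are illustrative assumptions, and suitable values depend on the documents and the model in use.

```python
def chunk_text(text, max_words=50, overlap=10):
    """Split text into chunks of up to max_words words, each sharing
    `overlap` words with the previous chunk."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this chunk has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks
```

The overlap reduces the chance that a sentence relevant to a query is cut in half at a chunk boundary.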

Challenges and Mitigation

- Data Privacy: Ensure compliance with applicable data protection regulations such as GDPR and HIPAA.

- Infrastructure Costs: Use cloud-based services to reduce upfront investment.

- User Adoption: Conduct training sessions to familiarize employees with AI systems.
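As one small, concrete data privacy measure, obvious personal identifiers can be masked before text is sent to an externally hosted LLM. The sketch below is a simplistic pattern-based illustration and does not by itself make a system compliant with GDPR or HIPAA; the patterns and the `redact` function are assumptions and will miss many kinds of personal data.

```python
import re

# Deliberately simple patterns (assumptions); real deployments need broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text
```

Redaction of this kind is usually applied both to user queries and to retrieved passages before they are placed in the prompt.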

Conclusion

RAG-LLM technologies give SMEs and SMBs a practical route to digital transformation and sustainable growth. By grounding LLM output in their own data, these businesses can address real-world challenges in customer support, knowledge management, marketing, risk management, and product development.

References

- "Enterprise AI For Dummies," Wiley Publishing.

- "AI For Dummies," Wiley Publishing.

- Harvard Business Review articles on innovation and growth strategies.

- OpenAI’s GPT documentation.

- "Retrieval-Augmented Generation: Enhancing AI with Real-Time Data," Research Paper.

- Google Scholar articles on RAG architectures.

- Industry case studies on AI adoption in SMEs and SMBs.

- Government reports on AI policy and data privacy guidelines.

P.S. To explore further, please submit your RFP to ias-research.com.